6月 09, 2021

1916

When we talk about the internal storage structure of the database, people will think of B-tree or B+ tree, but we will not talk about the principles of these data structures here, we will show why these data structures are suitable as the internal structure of database storage and use these The purpose of the data structure.

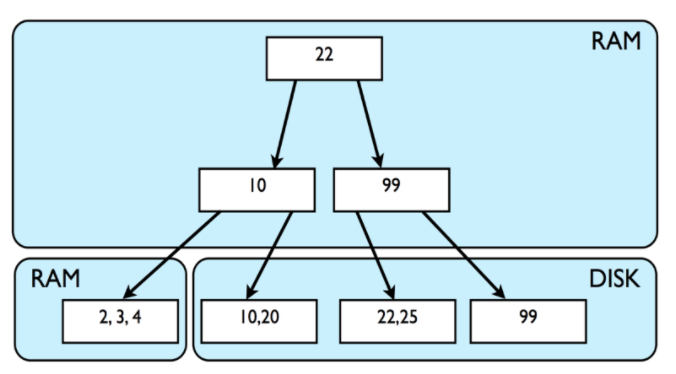

Traditional relational data stores data on disk in the form of B-trees, and they also use B-trees on RAM to maintain indexes of these data to ensure faster access speed. The inserted rows are stored on the leaf nodes of the B-tree, and all intermediate nodes are used to store the original data used for navigation query statements. Therefore, when millions of data are inserted into the database, the index and data storage will become very large. Therefore, for fast access, all data needs to be loaded from the disk to the memory, but RAM generally does not have such a large space to store all the data. Therefore, the database must read part of the data from the disk. This scenario of loading data is shown in the figure below:

The B-tree is designed to store data in the form of blocks, because the operating system reads data from a block much faster than reads individual bytes of data. The block size of MySQL's InnoDB storage engine is 16KB. This means that every time you read or write data, a block of 16KB data will be loaded from the disk into the RAM, it will be written with new data, and written back to the disk again. Assuming that each row of data in the database table is 128 bytes (the actual size will vary), a block (leaf node) is 16KB, and (16*1024)/128=128 rows of data are stored. The height of the B-tree is generally less than 10, but the number of nodes in each layer is large, which can manage tens of thousands of data. Based on the above characteristics, B-tree is suitable as an internal storage structure for data.

Therefore, the read operation on the B-tree is relatively fast, because the operation only needs to traverse some nodes and make a small number of disk I/O requests. Moreover, the range query is faster because the data can be retrieved and manipulated in the form of blocks. You can perform the following operations to improve the performance of the B-Tree-based database:

Reduce the number of index nodes: This is a common strategy to improve the performance of relational databases. The more indexes there are, the more indexes need to be managed for insert and update operations. When the database data runs longer and longer, you need to delete some old or useless indexes, and carefully add new indexes. But you should also be aware that fewer indexes means poorer query performance, and you need to make a trade-off between query performance and insert update performance (Translator's Note: You can consider the database read-write ratio).

Sequential Insertion: If you can insert a large amount of data sequentially based on the size of the primary key, the speed of inserting data will be very fast. Because the block to which the inserted row belongs is already in the memory during the insertion process, the database can directly insert the row into the data structure of the memory, and then submit it to the data disk through a disk I/O. Of course, these all depend on the specific implementation of the database, but I think modern databases generally carry out similar optimizations.

But B-tree is not the optimal storage structure for all scenarios. The performance of write operations to the B-tree structure is very poor, worse than random read, because the database must load the page corresponding to the data from the disk, then modify it and flush the new write to the disk. Random writes have an average write speed of 100 bytes per second. This limitation is due to the basic working principle of disks. In fact, simply relying on the use of cache, index search and more memory can handle more read operations, but it is more troublesome to deal with more write operations. When you need to write or update a large amount of data, the B-tree structure is not the most correct choice. For a long time, traditional databases have undergone a lot of optimizations. For example, InnoDB tries to use buffering to reduce disk I/O operations. The specific operation is as follows:

Database write operations can increase the speed by increasing the bandwidth of the disk, but the current relational database does not do this. And relational database management systems are generally very complicated, because they use locks, concurrency, ACID transactions and other operations, which make write operations more complicated.

In today's information age, in customer-centric services such as messaging, chat, real-time communications, and the Internet of Things, and distributed systems with large amounts of unstructured data, millions of write operations are performed every hour. Therefore, these systems are write-oriented systems. In order to meet the needs of these systems, the database needs to be able to insert data quickly. Typical databases cannot meet similar scenarios well because they cannot cope with the requirements of high availability, ultimate consistency as much as possible, flexibility of unformatted data, and low latency.

The LSM tree (LogStructuredMergeTree) came into being. LSM is not a data structure similar to a B-tree, but a system. In the LSM system, there is no in-place replacement of data; once the data is written to the disk, it will never be modified. Obviously, it is a write system that can only be appended (appendonly). Some log-structured file systems such as ext3/4 also use similar principles. Therefore, the system is like a sequential data log. Basically, the LSM system takes advantage of sequential writing. The write operation of the traditional disk drive can reach up to 100MB/s, and the speed of the modern solid-state hard disk is faster in sequential writing. In fact, SSD drives have some built-in parallel mechanisms to allow it to write 16 to 32 MB of data at the same time. The characteristics of the LSM tree and the solid state drive are very matched. Sequential writing is much faster than random writing.

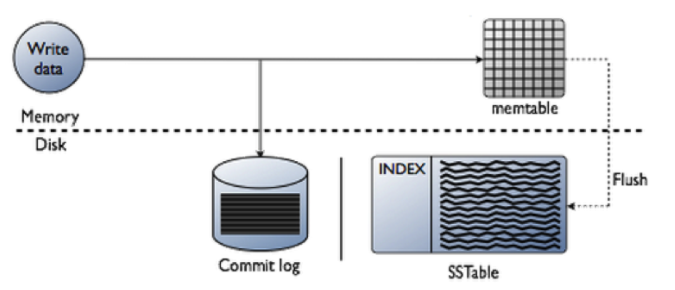

In order to correctly understand the above scenario, let us briefly look at how Facebook's Cassandra database uses the LSM principle.

Cassandra or any LSM system will maintain one or more memory data structures (such as memtable in the above figure) used to store data before writing to disk, such as sub-balanced trees (AVL), red-black trees, B-trees, or jump tables . The memory data structure maintains a sorted data set. Different LSM implementations mutually use different data structures to adapt to different needs, and there is no standard LSM implementation. When the data stored in the memory exceeds the configured threshold, the data stored in the memory will be placed in the queue that will be written to the disk. In order to flush data, Cassandra sequentially writes sorted data to disk. The disk maintains a data structure called "SSTable" (SortedStringsTable). This data is an ordered snapshot of the data written to the file. SSTable is immutable. The LSM system can manage multiple files on the disk.

Therefore, if the data is not found in the memory, Cassandra needs to scan all SSTables on the disk to search for the data. Therefore, Cassandra read operations are relatively slower than write operations, but there are some methods that can be handled. Cassandra or other LSM systems will run compression programs in the background to reduce the number of SSTables. The compression program merges and sorts the SSTable, inserts the new sorted data in the new SSTable, and deletes the old SSTables. However, the use of compression programs sometimes cannot cope with the millions of update operations in the database.

Therefore, some probabilistic data structures (probabilisTIcdatastructures) such as Bloomfilters are used to quickly determine whether some data exists in the SSTable. Bloomfilters is very suitable for judging the data in the memory, because it requires a large number of random queries to make a probabilistic judgment on whether the data exists. Bloomfilters algorithm can greatly reduce the cost of traversing and querying SSTables. Therefore, the LSM system solves the problem that writing operations in big data takes a lot of time. The LSM system also has the problem of ReadamplificaTIon-it will read more data than it actually needs. Therefore, is there a solution between BTree and LSMTree to give us the best (not necessarily accurate) reading and writing efficiency?

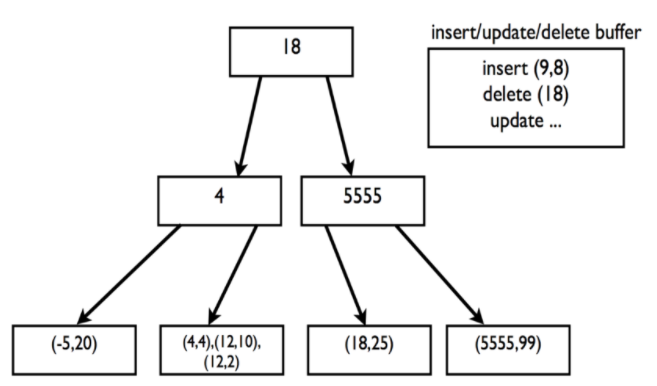

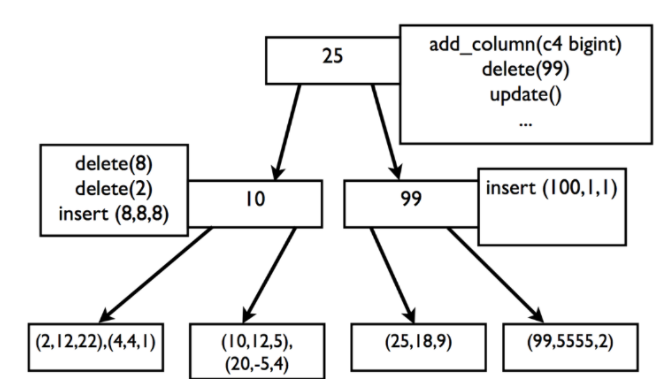

FractalTreeIndex is a data structure based on B-Tree. According to the benchmark given by the developer, this data structure has better performance than B-Tree. Fractaltree supports information caching on non-leaf nodes. Tokudb, MySQL's high-performance storage engine, uses Fractaltree.

As shown in the figure above, in FractalTree, any operations you perform, such as adding columns, deleting columns, inserting, updating, etc., will be stored as operation messages on non-leaf nodes. Since the operation is simply stored in the cache or any secondary index buffer (secondaryindexbuffer), all operations will be executed quickly. When the cache of a node is full, these operation messages will be passed from the root node to the non-leaf nodes in turn to the leaf nodes. The leaf node still stores the real data. When reading, the read operation will consider all operation messages on the query path node to obtain the true data state. But since tokudb will try its best to cache all non-leaf nodes in memory, this process is also fast.

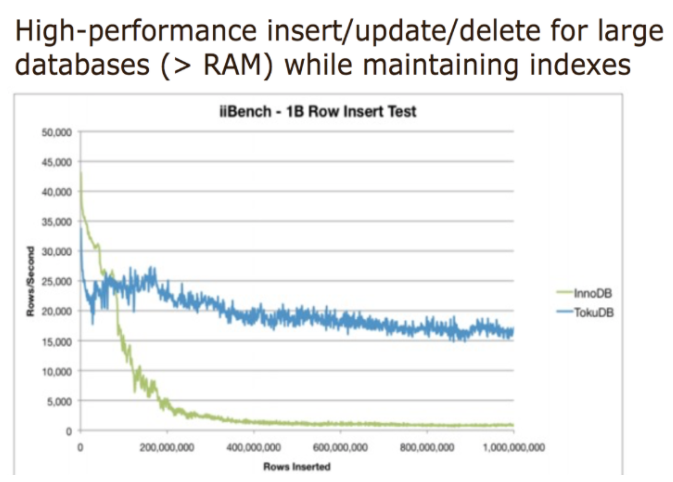

The block in tokubd can reach a maximum of 4MB instead of the 16KB in InnoDB. Such a size can allow more data to be loaded or written back in one I/O operation, which also helps to compress more data at a time to reduce the storage size of the data on the disk. Therefore, tokudb emphasizes that a larger block size can achieve better data compression and less disk I/O. Tokudb claims that their storage engine is faster than InnoDB and provides faster read and write throughput than InnoDB. Tokudb also claims that it has fewer fragmentation problems, and it also supports multi-cluster indexes. The following figure is the relevant statistical chart of the benchmark:

Only the benchmark in your system can help you determine the correct data points and demand solutions. But MySQL's storage engine will continue to improve and support emerging needs. The LSM tree is a system for high-write scenarios, while the B-tree is for traditional scenario applications. The Fractal tree index improves some of the defects of the B-tree index. Therefore, there will be continuous technological innovations in the future, including database storage technology, hardware, disk drives and operating systems, let us wait and see.